Memory Management in Operating System Tutorial Notes with Examples

Memory Management

memory management techniques allow several processes to share memory. When several processes are in memory they can share the CPU thus increasing CPU utilization.

Overlays

This techniques allow to keep in memory only those instructions and data Which are required at given time.

The other instruction and data is loaded into the memory space occupied by the previous ones when they are needed.

Swapping

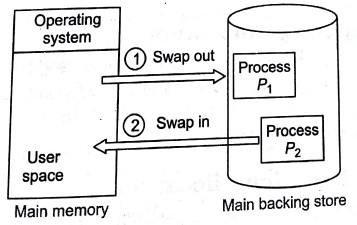

Consider an environment which supports multiprogramming using say Round–Robin (RR) CPU scheduling algorithm. Then, when one process has finished executing for

one time quantum, it is swapped out of memory to a backing store.

The memory manager then picks up another process from the backing store and loads it into the memory occupied by the previous process. Then the scheduler picks up another process and allocates the CPU to it._____

Logical versus Physical Address

An address generated by the CPU is commonly referred to as a logical address whereas an address seen by the memory unit is commonly referred to as physical address.

Memory Management Techniques

The main memory must accommodate both the operating system and the various user processes. The parts of the main memory must be allocated in the most efficient way possible.

There are two ways for memory allocation as given below

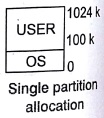

Single Partition Allocation

The memory is divided into two parts. One to be used by OS and the other one is for user programs. The OS code and date is protected from being modified by user programs using a base register.

Multiple Partition Allocation

The multiple partition allocation may be further classified as

Fixed Partition Scheme

Memory is divided into a number of fixed size partitions. Then, each partition holds one process. This scheme supports multiprogramming as a number of processes may how be brought into memory and the CPU can be switched from one process to another.

When a process arrives for execution, it is put into the input queue of the smallest partition, which is large enough to hold it.

Variable Partition Scheme

A block of available memory is designated as a hole at any time, a set of holes exists, which consists of holes of various sizes scattered throughout the memory.

Hock of

When a process arrives and needs memory, this set of holes is searched for a hole which is large enough to hold the process. If the hole is too largo, it is split into two parts. The unused part is added to the set of holes. All which are adjacent to each other are merged.

Searching for Hole in the Set

There are several algorithm which are used to search for the hole in the set.

- First fit This allocates the first hole in the set, which is big enough to hold the process.

- Next fit This works like the first fit algorithm except that it keeps track of the position of the hole found in the set. The next time it is called it starts searching from that position.

- Best fit This allocates the smallest hole, which is large enough to hold the process. ;

- Worst fit This algorithm simply allocates the largest hole.

Disadvantages of Memory Management Techniques

The above schemes cause external and internal fragmentation of the memory as given below

External Fragmentation: When there is enough total memory in the system to satisfy the requirements of a process but the memory is not contiguous.

Internal Fragmentation: The memory wasted inside the allocated blocks of memory called internal fragmentation.

e.g., consider a process requiring 150 k, thus if a hold of size 170 k is allocated to it the remaining 20 k is wasted.

Compaction

This is strategy to solve the problem of external fragmentation. All free memory is placed together by moving processes to new locations.

Paging

allocated

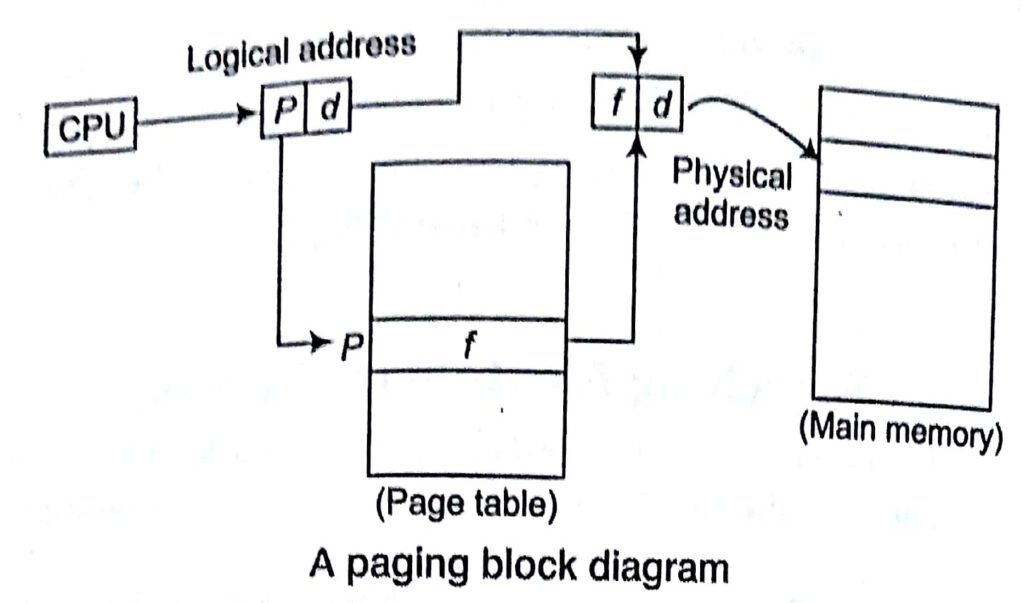

It is a memory management technique, which allows the memory to be allocated to the process wherever it is available. Physical memory is divided into fixed size blocks called frames. Logical memory is broken into block of same size called pages. The backing store is also divide into same size blocks.

When a process is to be executed its pages are loaded into available page frames. A frame is a collection of contiguous pages. Every logical address generated by the CPU is divided into two parts. The page number (P) and the page offset (d). the page number is used as an index into a page table

Each entry in the page table contains the base address of the page in physical memory (f). The base address of the Path entery is then combined with the offset (d) to give the actual address in memory.

Key Points

- The size of a page is typically a power of 2. The selection of a power of 2 as a page size makes the translation of a logical address into a page number and page off set, particularly easy.

- If the size of logical address space is 2′ and a page size is 2″ addressing units (bytes or words), then the high order (m-n) bits of a logical address designates the page number and the n low- order bits designates the page offset.

Advantages of Paging

The following are the advantages of paging

It allows the memory of a process to be non-contiguous. This also solves the problem of fitting or storing memory blocks of varying sizes in secondary memory (such as backing store).

Paging also allows sharing of common code. Two or more processes are allowed to execute the same code at the same time.

Virtual Memory

Separation of user logical memory from physical memory. It is a techniclory run process size more than main memory. Virtual memory is a memory management scheme which allows the execution of a partially loaded Process.

Advantages of Virtual Memory

The advantages of virtual memory can be given as

- Logical address space can therefore be much larger than physical address space.

- Allows address spaces to be shared by several processes.

- Less I/O is required to load or swap a process in memory, so each user can run faster.

Segmentation

- Logical address is divided into blocks called segment e., logical address space is a collection of segments. Each segment has a name and length.

- Logical address consists of two things

< segment_number, offset>

- Segmentation is a memory-management scheme that supports the following user view of All the location within a segment are

placed in contiguous location in primary storage.

Demand Paging

Virtual memory can be implemented by using either of the below.

(i) Demand paging (ii) Demand segmentation

Demand paging is combination of swapping and paging. With demand paged virtual memory, pages are only loaded when they are demanded during program execution. As long as we have no page faults, the effective access time is equal to the memory access time. If however, a page fault occurs, we must first read the relevant page from disk and then access the

desired word.

Effective Access Time

Effective access time = [((1 — p) x ma) + (p x page fault time))]

Here, ma = Memory access time

p = The probability of page fault (0 ≤ p ≤ 1)

If p = 0, it means no page faults.

If p = 1 every _ _ p to be close to zero that is there will be only few page fault

We expect p to be close to zero that is there will b e only few page faults.

Page Replacement

In a multiprogramming environment, the following scenario often result While execution of a process, page fault occurs and there are no frames on the free frame list. This is called over allocation of memory results due to increase in the degree of multiprogramming. page replacement is a technique to solve this problem.

Key Points –

- Paging and segmentation implement non-contiguous allocation of memory

- Logical memory is divided into blocks called pages physical memory is divided into fixed sized blocks known as frames.

Concept

If no free frame is available, a frame is found which is not in use. Th. contents of the frame are written onto a backing store and the page tables are modified to indicate that the page is no longer in memory. Thus, the frame can be used to hold the page for which the page fault was generated.

One bit is associated with each frame. If the bit is 1, this implies that the contents of the page was modified this is called the dirty bit. If the dirty bit is 0, the content of the frame need not be written to the backing store.

Page Replacement Algorithms

In a computer operating system that uses paging for virtual memo!) management, page replacement algorithms decide which memory pages to page out when a page of memory needs to be allocated.

First In First Out (FIFO)

A FIFO replacement algorithm associates with each page, the time when

that page was brought into memory. When a page .must be replaced, Itl- oldest page is chosen.

- It is not strictly necessary to record the time, when a page is brought, in We can create a FIFO queue to hold all pages in memory. We replace the page at the head of the queue. When a page is brought into memory insert it at the tail of the queue.

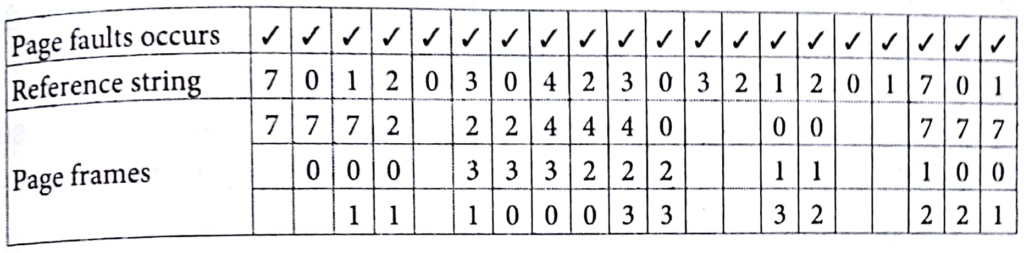

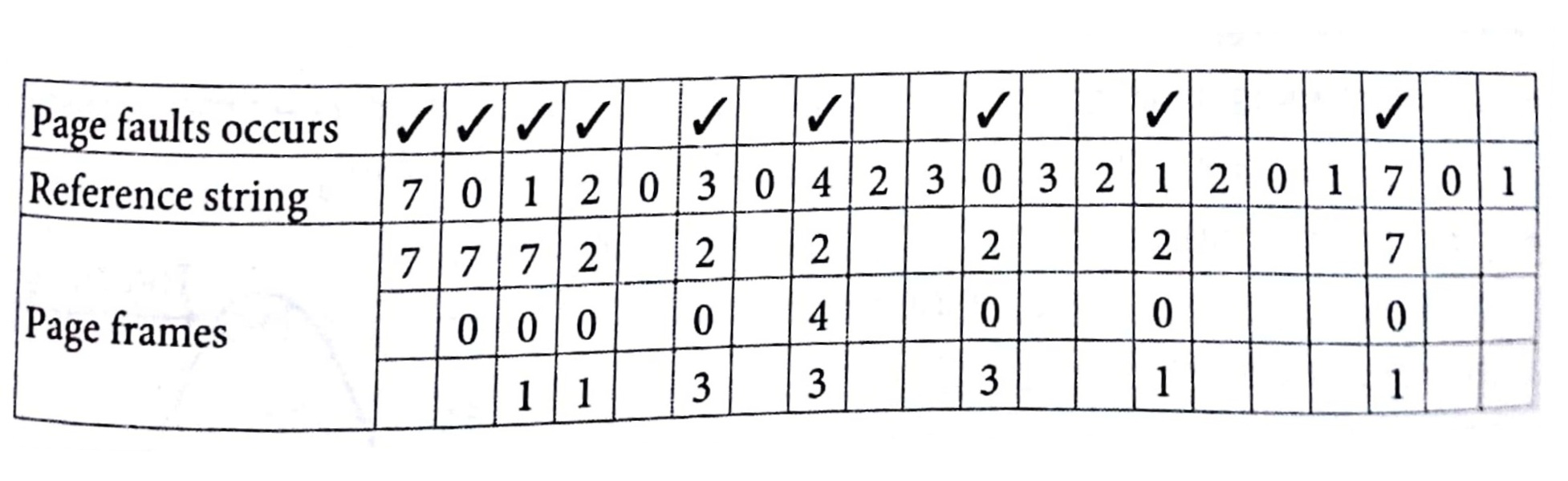

e.g., Consider the reference string

7, 0, 1, 2, 0, 3, 0 4, 2, 3, 0 3, 2, 1, 2, 0, 1, 7, 0, 1

Initially our 3 frames (frame size = 3) are empty. The first 3 references

(7, 0, 1) cause page faults and are brought into these empty frames. The

next reference (2) replaces page 7, because page 7 was brought in first.

Since, 0 is the next reference and 0 is already in memory, we have no fault

for this reference. The first reference to 3 results in replacement of page 0,

since it is now first in line. Because of this replacement, the next reference

to 0, will fault. Page 1 is then replaced by page 0. This process continues as shown in below figure.

Total number of page faults in the above example =15

Optimal Page Replacement

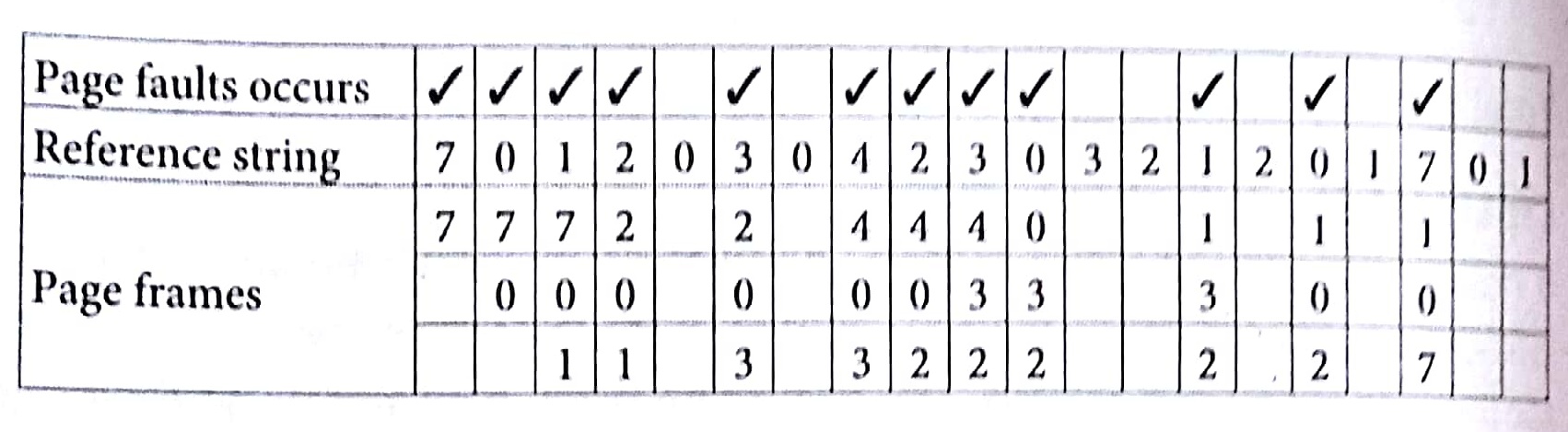

It has the lowest page fault rate of all algorithms and will never suffer from Belady’s .anomaly. This algorithm replaces the page that will not be used for the longest period of time. This algorithm gives a lowest page fault rate. It is very difficult to implement because, if requires future knowledge about the usage of the page.

e.g., Using the previous reference string, the optimal page replacement algorithm would field 9 page faults. The first 3 references cause faults that fill the 3 empty frames. The reference to page 2 replaces page 7, because page 7 will not be used until reference 18, whereas page 0 will be used at 5 and page 1 at 14. The reference page 3 replaces page 1, as page 1 will be the last of the 3 pages in memory to be referenced again.

Key Point

Key Point

- Beladys’ Anomaly For some page replacement algorithms, the page Lac 1.ttl‘ may increase as the number of allocated frames increases.

- This phenomenon known as Belady’s anomaly.

Least Recently Used (LRU) Page Replacement

In this, we use the recent past as an approximation of the near future, then we can replace the page that has not been user for the longest period of time.

e.g., we are considering the previous reference string (used in optimal page replacement algorithm), the first 5 faults are the same as those for optimal replacement. When the reference to page 4 occurs, however LRU replacement sees that of the 3 frames in the memory, page 2 was used least recently, Thus, the LRU algorithm replaces page 2, not knowing that page 2 is abut to be used, When it then fallout for page 2, the LRU algorithm replaces page 3, since it is how the least recently used of the three pages in memory. Consider frame size = 3 for example given below.

Frame Allocation

Frame Allocation

The maximum number of frames that can he allocated to a process depend on the size of the physical memory (i.e, total number of frames available). The minimum number of frames which can be allocated to a process depend on the instruction set architecture.

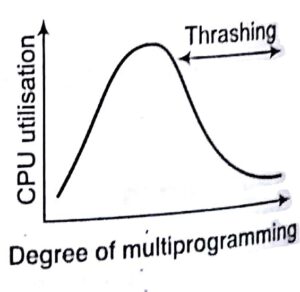

Thrashing

- A process is said to be thrashing when it is spending more time in paging (i.e., it is generating a lot of page faults) than

- Thrashing causes low CPU utilization. The processes which are thrashing queue up for the paging device.

- The CPU schedule sees the empty ready queue and tries to increase the degree of multiprogramming by introducing more processes in the system. These new processes cause more page faults and increase the length queue for the paging

Sorting in Design and Analysis of Algorithm Study Notes with Example

Learn Sorting in Handbook Series: Click here

Follow Us on Social Platforms to get Updated : twiter, facebook, Google Plus